Archives for RSS Returns : History as Seen by Bloggers

"I would rather have questions that can't be answered than answers that can't be questioned." - Richard Feynman

"The penalty good people pay for not being interested in politics is to be governed by people worse than themselves." - Plato, Greek philosopher

Classified Conspiracy Theory

Search This Blog

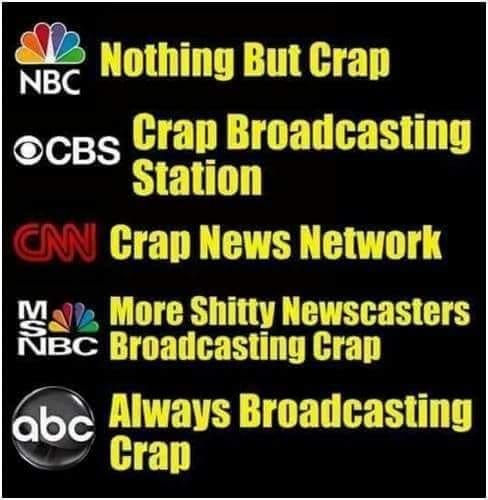

Media Consolidation

Fair Use Notice

One Planet

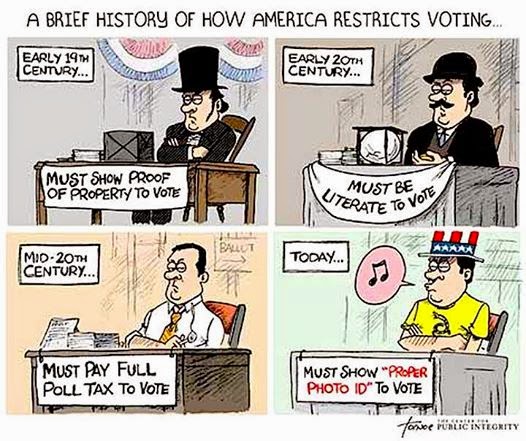

Free Country

Noted Articles

Search published scientific literature

You.com ( ad free Search )

Why Craig Kelly resigned on Tuesday ( post FB censorship)

Barrington Declaration ( ripping a strip off Covid-19 policy)

Collateral Global Project studying outcomes of policies

A Henchman Balks : Digby ( Is it sad...or hysterically funny?)

Livejournal - RSS Syndication

Korean professor on COVID-19 ( likes masks)

Sidebar lists of Notable Articles - Livejournal - Tagfile

SIDEBAR INDEX - Notable Articles reposted to LiveJournal

Disqus : o.p.i.t.

Public Dashboard : opit RSS Netvibes 2 3 4 5

Book Love Foundation

Civil Newsrooms : Blockchain

Seen This : About ( French )

Fossil Fuel Subsidies ( kindly find them )

When feeding the desperate becomes a crime ( despicable law)

https://file.wikileaks.org/file/ ( Assange arrest 'celebration' )

Religious Discrimination Bill : Australia ( lawyer employment initiative )

Aquatic Ape Pt 1 ( YouTube )

Ambassador of Controversy : Pt 1 Craig Murray

The Bremer Edicts ( Iraq )

300 free online courses from Ivy League universities

Secretmag.ru BookBub Signs of the times DQ World

The Knockout Punch The real climate record explained

YouTube Censorship Defends Powerful ( Watch the clip )

Floodgap HT Opera

Body Count pdf

IDEAS Economics Research Database - Federal Reserve

anh

Pages

NSA was so secret its name was hidden

BiPartisan Tyranny

History of Education

Clean Coal

Clustermap

Translate

Blog Archive

- 2016 (1200)

- December (109)

- 31 December - Blogs I'm Following

- 30 December - igoogle portal - old 2

- 30 December - igoogle portal - old

- 30 December - igoogle portal

- 30 December - My Yahoo! 2

- 30 December - My Yahoo!

- 30 December - Pale Moon Start Page - Tech News and...

- 30 December - Blogs I'm Following

- 29 December - Blogs I'm Following

- 28 December - Blogs I'm Following

- 27 December - Blogs I'm Following

- 27 December - My Yahoo! 2

- 27 December - My Yahoo!

- 27 December - Pale Moon Start Page - News and Tech...

- 27 December - Netvibes 3 - 3

- 27 December - Netvibes 3 - 2

- 26 December - Blogs I'm Following

- 26 December - The View 2

- 25 December - The View

- 25 December - Blogs I'm Following

- 25 December - Netvibes 3 ( 1 of 2 )

- 25 December - Netvibes 2 ( 2 of 2 )

- 25 December - Netvibes 2 ( 1 of 2 )

- 25 December - Netvibes - oldephartteintraining

- 25 December - Netvibes - Breaking News

- 25 December - Pale Moon Start Page - News ( Canada )

- 25 December - Netvibes ( 3 of 3 )

- 25 December - Netvibes ( 2 of 3 )

- 25 December - Netvibes ( 1 of 3 )

- Sustainable Pulse

- 24 December - pm - Blogs I'm Following

- 24 December - am - Blogs I'm Following

- 23 December - igoogle portal old 2

- 23 December - igoogle portal old

- 23 December - igoogle portal

- 23 December - My Yahoo! 2

- 23 December - My Yahoo!

- 22 December - Blogs I'm Following

- 21 December - Blogs I'm Following

- 20 December - pm - Blogs I'm Following

- 20 December - Netvibes 5 - 2

- 20 December - Netvibes 5 - 1

- 20 December - Pale Moon Start Page - Tech News

- 20 December - Pale Moon Start Page - US News

- 20 December - am - Blogs I'm Following

- 18 December - Blogs I'm Following

- 17 December - Blogs I'm Following

- 17 December - Pale Moon Start Page - News ( Canada )

- 16 December - Blogs I'm Following

- 16 December - igoogle portal - old #2

- 16 December - igoogle portal - old

- 16 December - igoogle portal

- 16 December - My Yahoo! 2

- 16 December - My Yahoo!

- 16 December - Pale Moon Start Page - Tech News

- 16 December - Pale Moon Start Page News ( US )

- 15 December - Blogs I'm Following

- 14 December - Blogs I'm Following

- 13 December - pm - Blogs I'm Following

- 13 December - am - Blogs I'm Following

- 12 December - Netvibes - Breaking News

- 12 December - Netvibes - oldephartteintraining

- 12 December - Netvibes 5

- 12 December - Netvibes 4

- 12 December - Netvibes 3

- 12 December - Netvibes 2

- 12 December - Netvibes

- 11 December - Blogs I'm Following

- 11 December - Steve Lendman Blog

- 11 December - My Feedly! 2

- 11 December - My Feedly!

- 11 December - Quick Picks

- 11 December - igoogle portal

- 11 December - old igoogle portal 2

- 11 December - old igoogle portal

- 11 December - Pale Moon Start Page - Tech News

- 11 December - Pale Moon Start Page - US News

- 11 December - My Yahoo! 2

- 11 December - My Yahoo!

- 11 December - Ancient Code

- 10 December - Blogs I'm Following

- 9 December - Blogs I'm Following

- 9 December - Pale Moon Start Page - News ( Canada )

- 8 December - Blogs I'm Following

- 7 December - Blogs I'm Following

- 7 December - Netvibes - oldephartteintraining

- 7 December - Netvibes - Breaking News

- 7 December - Netvibes 5

- 7 December - Netvibes 4

- 7 December - Netvibes 3

- 7 December - Netvibes 2

- 7 December - Netvibes

- 6 December - Blogs I'm Following

- 5 December - Blogs I'm Following

- 5 December - Blazing Cat Fur - RSS SnapShot!

- 5 December - My Yahoo! 2

- 5 December - My Yahoo!

- 5 December - igoogle portal ( yet another result )

- 5 December - old igoogle portal

- 5 December - igoogle portal

About Me

- opit

- I've been 'around' for a few years now, pursuing the shifting goal of a sharable home-made surfer's resource site focused on ease of use and variety of mostly adult ( whoa : I didn't say prurient ) content.
